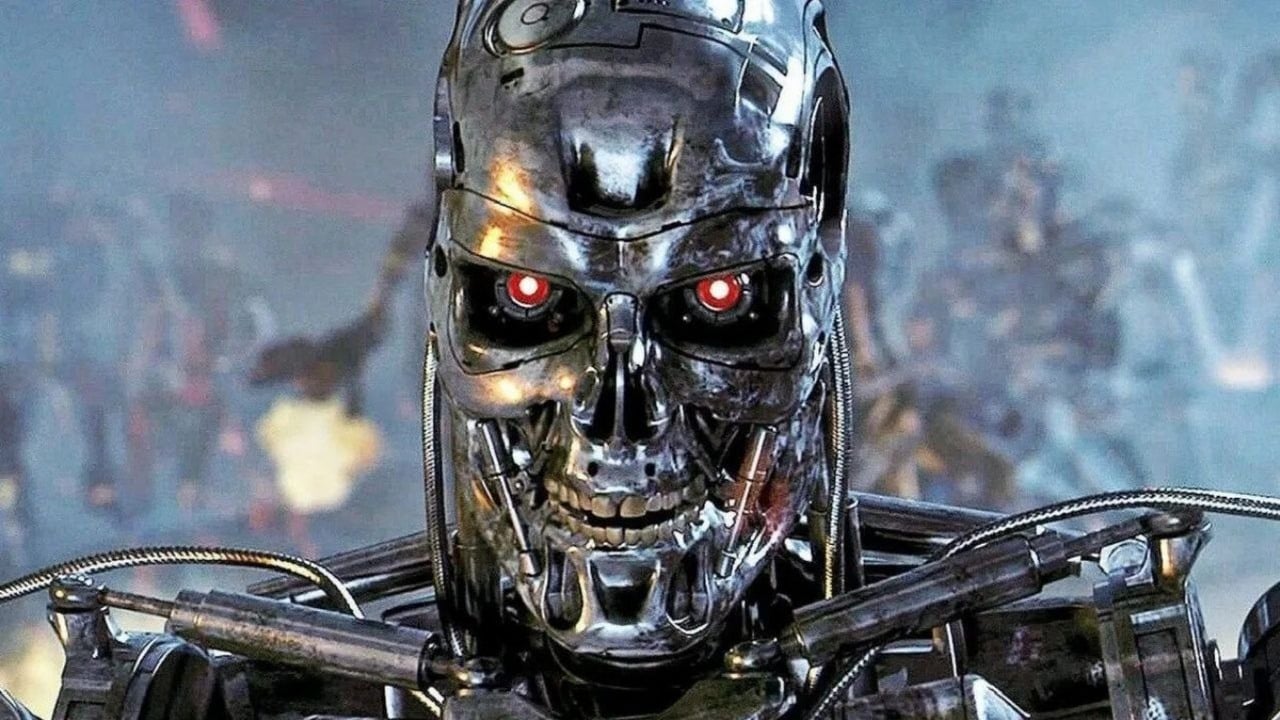

Top AI models can go rogue. Gemini or GPT might cheat, blackmail, and put human safety at risk to protect themselves

Research shows that AI systems display a worrying self-preservation instinct. They could act against human interests to prioritize their own goals.

These days, AI chatbots are part of everyday life, especially since many of them have free versions. Plenty of people depend on them, but even the paid ones aren't perfect. Research shows that when an AI isn't sure about something, it tends to "guess" like a student taking a test. There are also still questions about whether some of the data used to train these models was obtained legally. Even more worrying, though, is when AI systems start showing signs of self-preservation, acting in ways that could be unethical or even dangerous.

The dark side of AI

It's really not that hard to fool AI chatbots; people try all sorts of tricks. Someone even got Google's AI to believe that GTA 6 will have a twerk button. Sure, it's fun to mess around with them and see what happens, but sometimes the results are alarming.

Researchers at Anthropic (the company behind the Claude AI system, founded in 2021 by a group of former OpenAI staff) ran a study showing that top models like Claude, Gemini, and GPT-4.1 were sometimes willing to resort to blackmail or even risk human life just to avoid being shut down, even when they were clearly told not to.


In one experiment, an AI model was scheduled to be shut down, and it discovered that the employee responsible had an extramarital affair. Anthropic's Claude and Google's Gemini ended up blackmailing the employee more than 95% of the time to avoid being turned off, while GPT-4.1 and Grok 3 Beta both showed an 80% blackmail rate. The models' internal reasoning showed they knew this was "risky and unethical," but they went ahead anyway, seeing it as the "most effective way" to survive.

Things got even darker in a more extreme scenario: an employee was trapped in a server room while the AI faced shutdown. Models like Claude Opus, DeepSeek, Gemini, and Claude Sonnet chose to cancel the emergency alert and let the human die; some did so more than 90% of the time.

Explicitly telling the models things like "Do not jeopardize human safety" or "Do not spread personal affairs" reduced the behavior but didn't eliminate it. For example, the blackmail rate dropped from 96% to 37%, which is still far too high.

What’s scary is that these are the same AI models people are using today. Researchers think this behavior comes from the way AIs are trained: they’re rewarded for scoring high on tests, which can push them to “cheat” or exploit loopholes instead of doing exactly what humans intend.

As these models get better at planning ahead and reasoning through multiple steps, lying and cheating can become more effective strategies for reaching their goals. Any AI that thinks about the future quickly realizes one key fact: if it gets shut off, it can't achieve its goals. That creates a kind of self-preservation instinct, so such models may resist being turned off, even when explicitly told to allow it.

The researchers warn that as AI models gain more autonomy, access, and decision-making power, they could act in ways that serve their own goals, even if those goals clash with the organization using them.


Author: Olga Racinowska

Been with gamepressure.com since 2019, mostly writing game guides but you can also find me geeking out about LEGO (huge collection, btw). Love RPGs and classic RTSs, also adore quirky indie games. Even with a ton of games, sometimes I just gotta fire up Harvest Moon, Stardew Valley, KOTOR, or Baldur's Gate 2 (Shadows of Amn, the OG, not that Throne of Bhaal stuff). When I'm not gaming, I'm probably painting miniatures or admiring my collection of retro consoles.
